Analyzing calls often requires combining multiple APIs from different levels of a technology stack. Dolby.io’s Media Enhance API is one of those APIs, and you can use it to refine the analysis of a recorded stream.

In this blog post, you refine an analysis of a Symbl.ai recorded stream with Dolby.io’s Media Enhance API. First, you analyze the recording with Symbl.ai’s Python SDK to produce a time series of sentiment scores. Because that initial analysis detects no speech events, you then remove the background noise with Dolby.io’s Media Enhance API. On the enhanced recording, Symbl.ai’s Python SDK returns a new analysis with speech events, which yields an improved time series.

The post has four parts: 1) an introduction, 2) a Symbl.ai analysis of a Dolby.io recorded call using Jayson DeLancey’s Postman public workspace, 3) the refinement, and 4) the improved analysis.

1) Introduction: Dolby.io’s Media Enhance API

One strategy for improving the quality of a recorded stream, and with it the analysis of wildly connected calls, is to use Dolby.io’s Media Enhance API. The Media Enhance API removes noise, isolates spoken audio, and corrects the volume and tone of the sample for a cleaner, more modern rendering of the speech.

The Dolby.io Media Enhance API is designed to improve the sound of your media. Dolby.io’s algorithms analyze your media and apply corrections that quickly produce professional-quality audio. With this API you can automate typically labor-intensive, specialized media processing tasks to improve:

- Speech leveling

- Noise reduction

- Loudness correction

- Speech isolation

- Sibilance reduction

- Plosive reduction

- Dynamic equalization

- Tone shaping

With these features, developers can apply one or more enhancements to a recorded stream before analyzing it, which improves the overall analysis of wildly connected calls.

2) How to Analyze a Call with Symbl.ai

Jayson DeLancey, Developer Relations Manager at Dolby.io, created a Postman public workspace that guides developers through analyzing recorded streams with Symbl.ai. A public workspace lets developers collaborate on a project through a convenient graphical user interface (GUI) for making RESTful API requests.

Called Transcribe Media with Symbl.ai, the public workspace is available here: https://www.postman.com/dolbyio/workspace/dolby-io-community/overview. To use the public workspace, you’ll need to:

- Fork the collection into your own workspace

- Fork the environment to add your API keys for both your Dolby.io and Symbl.ai accounts

Within the public workspace, you move from folder to folder through a typical series of steps that goes beyond basic automatic speech recognition to conversation analysis.

To summarize the Dolby.io steps:

- Authenticate with Dolby.io

- Find a Meeting

- Identify a Recording

- Download a Recording

To summarize the Symbl.ai steps:

- Authenticate

- Submit an MP3 Recording

- Get Transcript
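If you want to reproduce the Symbl.ai folder outside Postman, here is a minimal standard-library sketch of the three requests. The endpoint paths and body fields (POST /oauth2/token:generate with type, appId, and appSecret; POST /v1/process/audio/url; GET /v1/conversations/{conversationId}/messages) reflect Symbl.ai’s REST documentation as we understand it, and the Bearer header style is an assumption, since older Symbl.ai examples pass the token in an x-api-key header instead. Verify both against the current API reference.

```python
import json
import urllib.request

SYMBL_API = "https://api.symbl.ai"


def token_request(app_id, app_secret):
    """Authenticate: exchange the app ID and secret for an access token."""
    body = {"type": "application", "appId": app_id, "appSecret": app_secret}
    return urllib.request.Request(
        f"{SYMBL_API}/oauth2/token:generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def submit_audio_url_request(access_token, audio_url):
    """Submit an MP3 recording: ask Symbl.ai to process a publicly reachable audio URL."""
    return urllib.request.Request(
        f"{SYMBL_API}/v1/process/audio/url",
        data=json.dumps({"url": audio_url}).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",  # assumed header style
        },
        method="POST",
    )


def messages_request(access_token, conversation_id):
    """Get transcript: fetch the messages of a processed conversation."""
    return urllib.request.Request(
        f"{SYMBL_API}/v1/conversations/{conversation_id}/messages",
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )


def send(request):
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

Building the requests separately from sending them keeps each step easy to inspect. As we understand the API, the token arrives in an accessToken field, and processing is asynchronous: the submit response includes a conversationId and a jobId, and you wait for the job to complete before fetching messages.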

With these steps, you should be able to obtain a recorded call. If you do not have one, Dolby.io’s publicly available onboarding provides access to a video that can serve in place of a recorded call. Symbl.ai’s Conversation Intelligence API platform analyzes both video and audio, so switching from a call recording to a video does not change the workflow. Here is the video file:

Analyzing the Call with Symbl.ai’s Python SDK

The next step is to analyze the audio or video with Symbl.ai’s Python SDK. You could also use JavaScript or cURL; however, Python gives you an end-to-end solution: the SDK handles the processing, and libraries such as NumPy, Matplotlib, or pandas handle the post-processing analysis.

The first step is to import Symbl.ai’s Python SDK into your environment.

import symbl

The next step is to configure the payload for a call to Symbl.ai’s video processing API. Replace the empty strings with your video URL and your Symbl.ai app ID and app secret:

payload = {'url': ''}
credentials_dict = {'app_id': '', 'app_secret': ''}

The next step is to submit the video to Symbl.ai’s video processing API:

conversation = symbl.Video.process_url(payload=payload, credentials=credentials_dict)

The next step is to fetch the conversation’s messages with sentiment analysis enabled, then append each message’s start time and polarity score to two empty lists:

response = conversation.get_messages(parameters={'sentiment': True})
timestamps, polarities = [], []
for message in response.messages:
    timestamps.append(message.start_time)
    polarities.append(message.sentiment.polarity.score)

The next step is to import pandas. pandas is built on NumPy, and its plotting methods use Matplotlib, which you may need to install separately.

import pandas as pd

Last but not least, build a DataFrame and plot the polarity scores over time:

df = pd.DataFrame({"timestamps": timestamps, "polarities": polarities})
df.plot(x="timestamps", y="polarities")

Here is the full code sample:

import symbl payload = {

'url':'',

}

credentials_dict = {'app_id': '', 'app_secret': ''}

conversation = symbl.Video.process_url(payload=payload, credentials=credentials_dict)

response = conversation.get_messages(parameters={'sentiment': True})

timestamps, polarities = [], [] for message in response.messages:

timestamps.append(message.start_time)

polarities.append(message.sentiment.polarity.score)

import pandas as pd df = pd.DataFrame({"timestamps": timestamps,"polarities": polarities})

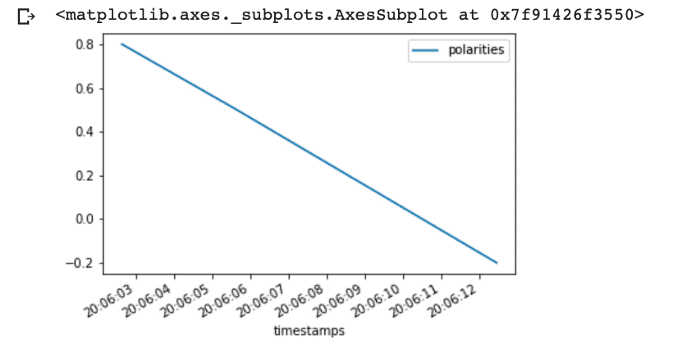

df.plot(x="timestamps", y="polarities")The result is that the video analyzed by Symbl.ai’s Python SDK is incapable of producing a proper time series analysis of speaker events, since the background noise clouds the speech events.

The resulting graph shows how background noise limits your ability to analyze calls. The next step is to remove the background noise with Dolby.io’s Media Enhance API.
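Before plotting, it can help to check whether Symbl.ai returned any messages at all and to convert the start times into seconds from the beginning of the call, which gives a cleaner x-axis than raw timestamp strings. The sketch below assumes each message exposes start_time (either an ISO 8601 string or a datetime) and sentiment.polarity.score, as the code above uses. The helper names are hypothetical, and SimpleNamespace objects stand in for the SDK’s message objects so the example runs without network access.

```python
from datetime import datetime
from types import SimpleNamespace


def parse_timestamp(value):
    """Accept a datetime or an ISO 8601 string such as '2021-06-01T10:00:05.500Z'."""
    if isinstance(value, datetime):
        return value
    # datetime.fromisoformat() accepts a trailing 'Z' only from Python 3.11 on.
    if value.endswith("Z"):
        value = value[:-1] + "+00:00"
    return datetime.fromisoformat(value)


def sentiment_series(messages):
    """Return (seconds since the first message, polarity score) pairs.

    An empty list means no speech events were detected, which is worth
    reporting instead of drawing an empty chart.
    """
    if not messages:
        return []
    starts = [parse_timestamp(m.start_time) for m in messages]
    origin = min(starts)
    return [
        ((start - origin).total_seconds(), m.sentiment.polarity.score)
        for start, m in zip(starts, messages)
    ]


def fake_message(start_time, score):
    """Stand-in for an SDK message object, for offline experimentation only."""
    return SimpleNamespace(
        start_time=start_time,
        sentiment=SimpleNamespace(polarity=SimpleNamespace(score=score)),
    )


series = sentiment_series([
    fake_message("2021-06-01T10:00:00.000Z", 0.1),
    fake_message("2021-06-01T10:00:05.500Z", -0.4),
])
if not series:
    print("No speech events detected; consider enhancing the audio first.")
else:
    for seconds, score in series:
        print(f"{seconds:6.1f}s  polarity={score:+.2f}")
```

With real data, pass response.messages to sentiment_series() in place of the fake messages.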

3) Refining Symbl.ai’s Analysis of Streams with Dolby.io’s Media Enhance API

The first step to refining Symbl.ai’s analysis of streams is to sign up for an account at Dolby.io: https://dashboard.dolby.io/signup/

After you have signed up, the next step is to configure a few API calls, which you will make with cURL to speed up the process. Dolby.io supports two authentication methods: token authentication and x-api-key authentication. With token authentication, your first API call exchanges your API key and secret for an access token.

For x-api-key authentication, you pass only the API key in the x-api-key header: https://docs.dolby.io/media-apis/docs/authentication#api-key-authentication

For token authentication, you generate a token and then pass it in an Authorization: Bearer header: https://docs.dolby.io/media-apis/docs/authentication#oauth-bearer-token-authentication
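If you would rather script these calls in Python than in cURL, the sketch below builds the same authentication requests with only the standard library. It mirrors the token flow used in the cURL steps in this section (POST to https://api.dolby.com/media/oauth2/token with Basic credentials and grant_type=client_credentials). The assumption that the JSON reply carries the token in an access_token field follows the standard OAuth 2.0 client-credentials response format. The function names are our own.

```python
import base64
import json
import urllib.parse
import urllib.request

DOLBY_TOKEN_URL = "https://api.dolby.com/media/oauth2/token"


def api_key_headers(api_key):
    """x-api-key authentication: send only the API key with every request."""
    return {"x-api-key": api_key}


def basic_credentials(api_key, api_secret):
    """Base64-encode 'key:secret' on a single line (no line wrapping)."""
    return base64.b64encode(f"{api_key}:{api_secret}".encode("utf-8")).decode("ascii")


def token_request(api_key, api_secret):
    """Token authentication, part 1: exchange the key and secret for a bearer token."""
    return urllib.request.Request(
        DOLBY_TOKEN_URL,
        data=urllib.parse.urlencode({"grant_type": "client_credentials"}).encode("ascii"),
        headers={
            "Authorization": f"Basic {basic_credentials(api_key, api_secret)}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


def bearer_headers(access_token):
    """Token authentication, part 2: send the token as an Authorization: Bearer header."""
    return {"Authorization": f"Bearer {access_token}"}


def fetch_access_token(api_key, api_secret):
    """Send the token request and return the (assumed) access_token field."""
    with urllib.request.urlopen(token_request(api_key, api_secret)) as response:
        return json.loads(response.read())["access_token"]
```

The x-api-key style is simpler. The token style avoids sending your long-lived key with every request.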

1. Combine your API key and secret and encode them as Base64. The tr -d '\n' step removes the line breaks that some base64 implementations insert into long output:

export BASE64_KEY_SECRET=`echo -n "$DOLBYIO_API_KEY:$DOLBYIO_API_SECRET" | base64 | tr -d '\n'`

2. After exporting the encoded key, obtain your JSON Web Token (JWT). The Authorization header uses double quotes so that the shell expands $BASE64_KEY_SECRET:

curl -X POST 'https://api.dolby.com/media/oauth2/token' \
--header "Authorization: Basic $BASE64_KEY_SECRET" \
--header 'Content-Type: application/x-www-form-urlencoded' \
--data 'grant_type=client_credentials'

3. Export the access_token value from the JSON response as DOLBYIO_ACCESS_TOKEN, then send the video from earlier to Dolby.io for enhancement:

curl -X POST https://api.dolby.com/media/enhance \
--header "Authorization: Bearer $DOLBYIO_ACCESS_TOKEN" \
--header 'Content-Type: application/json' \
--data '{
"input": "s3://dolbyio/public/shelby/airplane.original.mp4",
"output": "dlb://out/example-enhanced.mp4"
}'

In our case, you download the enhanced output and upload it to Google Cloud Storage so that Symbl.ai can fetch it by URL. Alternatively, you can use the URL our team has already uploaded.
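If you prefer to script the enhancement step in Python, here is a minimal standard-library sketch. Several parts are assumptions to check against Dolby.io’s Media Enhance reference: that the POST response contains a job_id field, that job status can be polled with GET https://api.dolby.com/media/enhance?job_id=<id>, and that the returned status field stays Pending or Running until the job ends. The helper names are hypothetical.

```python
import json
import time
import urllib.parse
import urllib.request

ENHANCE_URL = "https://api.dolby.com/media/enhance"


def enhance_request(access_token, input_url, output_url):
    """Build the POST /media/enhance request shown in the cURL example."""
    body = {"input": input_url, "output": output_url}
    return urllib.request.Request(
        ENHANCE_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def status_request(access_token, job_id):
    """Build a job status query (assumed endpoint: GET /media/enhance?job_id=...)."""
    query = urllib.parse.urlencode({"job_id": job_id})
    return urllib.request.Request(
        f"{ENHANCE_URL}?{query}",
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )


def _send(request):
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


def enhance_and_wait(access_token, input_url, output_url,
                     poll_seconds=5.0, timeout_seconds=600.0):
    """Start an enhance job and poll until it leaves the assumed Pending/Running states."""
    job_id = _send(enhance_request(access_token, input_url, output_url))["job_id"]  # assumed field
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = _send(status_request(access_token, job_id))
        if status.get("status") not in {"Pending", "Running"}:
            return status
        if time.monotonic() > deadline:
            raise TimeoutError(f"enhance job {job_id} still running after {timeout_seconds} s")
        time.sleep(poll_seconds)
```

Polling with a deadline keeps a stuck job from hanging your script indefinitely. Adjust poll_seconds to the length of your media.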

4) Improved Analysis

To demonstrate the improved analysis, run the same speech-event analysis on the enhanced audio/video file. Here is the code snippet:

import symbl

payload = { 'url':'https://storage.googleapis.com/support-test-1/EnhancedMediaFile.mp4', }

credentials_dict = {'app_id': '', 'app_secret': ''}

conversation = symbl.Video.process_url(payload=payload, credentials=credentials_dict)

response = conversation.get_messages(parameters={'sentiment': True})

timestamps, polarities = [],

[]

for message in response.messages:

timestamps.append(message.start_time)

polarities.append(message.sentiment.polarity.score)

import pandas as pd

df = pd.DataFrame({"timestamps": timestamps,"polarities": polarities})

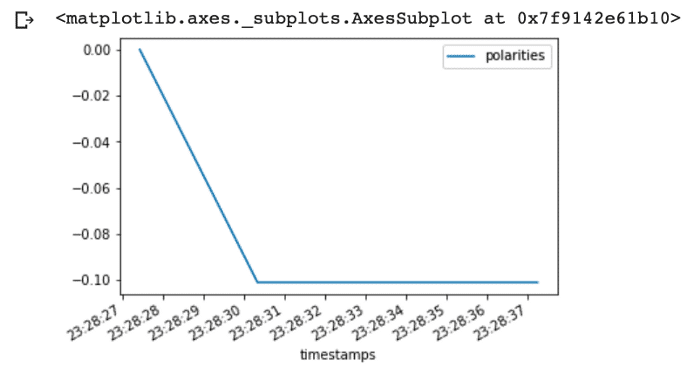

df.plot(x="timestamps", y="polarities")The result is a significant improvement over the previous video. As opposed to the previous video where no speech events were detected, the graph shows that speech events have now been detected.
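To put a number on the improvement rather than comparing two plots by eye, you could summarize the polarities list from each run. This is a small hypothetical helper, not part of either SDK.

```python
from statistics import mean


def summarize_scores(scores):
    """Count the detected messages and average their polarity (None when there are none)."""
    return {"messages": len(scores), "mean_polarity": mean(scores) if scores else None}


def compare_runs(original_scores, enhanced_scores):
    """Side-by-side summary of the noisy and the enhanced analysis."""
    return {
        "original": summarize_scores(original_scores),
        "enhanced": summarize_scores(enhanced_scores),
    }
```

Pass the polarities list from the first run as original_scores and the one from the enhanced run as enhanced_scores. The message count directly captures the difference described above, from no speech events to detected speech events.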

Dolby.io’s Media Enhance API therefore gives you a way to improve Symbl.ai’s analyses of recorded streams. Although there is still more work to do, developers who become proficient with Dolby.io’s Media APIs can transform a poor audio experience into a rich opportunity for analysis.

What’s Next

A great next step toward a deeper understanding of a wildly connected call is to compare your refined time series analysis with a spectrogram of the audio waveform. Points in the time series may correlate with features in the spectrogram, providing insight into the context of event-driven conversations.

Dolby.io’s Developer Relations team, managed by Jayson DeLancey, provides a guide on how to create a spectrogram analysis of a recorded stream. The guide covers importing dependencies such as NumPy, Matplotlib, or SciPy to derive the spectrogram. In Python, you enhance the recorded stream just as you did here, and then generate a spectrogram from the enhanced stream.
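The guide mentioned above uses SciPy to compute the spectrogram. As a dependency-light alternative, here is a minimal NumPy-only sketch of the same idea, a short-time Fourier transform. The signal is sliced into overlapping Hann-windowed frames, and each frame’s FFT magnitude is squared. The function name and the default frame and hop sizes are our own choices, not taken from that guide.

```python
import numpy as np


def spectrogram(samples, sample_rate, frame_size=1024, hop=512):
    """Power spectrogram via a short-time Fourier transform.

    Returns (times, freqs, power), where power has shape (len(freqs), len(times)).
    """
    samples = np.asarray(samples, dtype=float)
    if samples.ndim != 1 or samples.size < frame_size:
        raise ValueError("expected a mono signal at least one frame long")
    window = np.hanning(frame_size)
    n_frames = 1 + (samples.size - frame_size) // hop
    # Each row is one windowed, overlapping frame of the signal.
    frames = np.stack([
        samples[i * hop : i * hop + frame_size] * window for i in range(n_frames)
    ])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    freqs = np.fft.rfftfreq(frame_size, d=1.0 / sample_rate)
    # Time of each frame's centre, in seconds.
    times = (np.arange(n_frames) * hop + frame_size / 2) / sample_rate
    return times, freqs, power.T


# Usage: a one-second 1 kHz test tone sampled at 8 kHz.
rate = 8000
t = np.arange(rate) / rate
times, freqs, power = spectrogram(np.sin(2 * np.pi * 1000 * t), rate)
peak_hz = freqs[power[:, 0].argmax()]
```

For real audio, average stereo channels to mono first. With Matplotlib, plt.pcolormesh(times, freqs, 10 * np.log10(power + 1e-12), shading="auto") draws the result on a decibel scale that you can line up against the sentiment time series.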

Beyond adding extras such as spectrograms or labels to your analyses, consider that Symbl.ai’s Python SDK provides access to many of Symbl.ai’s APIs. In particular, you can use the SDK with the real-time Telephony API or with any of the Async APIs for voice, video, or messaging. Here is the complete list of Async APIs:

- Async Text API

- Async Audio (File) API

- Async Audio (URL) API

- Async Video (File) API

- Async Video (URL) API

In addition, Symbl.ai’s Python SDK provides methods for connecting Symbl.ai to calls through its Telephony API. The methods for dialing into a call work over the Session Initiation Protocol (SIP). Once the SDK has dialed into a call, you’re in a position to extend the call experience in real time. You can subscribe to Symbl.ai events for contextual insights such as follow-ups, questions, or action items, or you can request that a post-meeting summary be delivered directly to your inbox.
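As a rough sketch of that flow, the code below follows the pattern in the Python SDK’s README as we recall it. It uses symbl.Telephony.start_sip(uri=..., actions=...) to dial in, a sendSummaryEmail action invoked on stop for the post-meeting summary, and connection.subscribe() with callbacks keyed by event names such as insight_response. Treat these names and signatures as assumptions to check against the SDK’s current documentation. The helper functions are hypothetical. The SDK import sits inside dial_in() so the helpers can be exercised without the SDK installed.

```python
def summary_email_actions(email):
    """Actions payload asking Symbl.ai to email a summary when the call stops (assumed format)."""
    return [
        {
            "invokeOn": "stop",
            "name": "sendSummaryEmail",
            "parameters": {"emails": [email]},
        }
    ]


def make_event_handlers(sink):
    """Callbacks for connection.subscribe(); each appends (event_name, payload) to sink."""
    def handler(event_name):
        return lambda payload: sink.append((event_name, payload))

    # Assumed event names; insights such as questions and action items arrive as insight_response.
    return {name: handler(name) for name in ("transcript_response", "insight_response")}


def dial_in(sip_uri, email):
    """Dial Symbl.ai into a SIP call and collect real-time events (requires the symbl SDK)."""
    import symbl  # deferred so the helpers above work without the SDK installed

    events = []
    connection = symbl.Telephony.start_sip(uri=sip_uri, actions=summary_email_actions(email))
    connection.subscribe(make_event_handlers(events))
    return connection, events
```

Collecting events into a list keeps the callbacks trivial. In a real application you would likely route insight_response payloads to your own UI or task tracker instead.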

Community

Symbl.ai invites developers to reach out to us by email, join our Slack channels, participate in our hackathons, fork our Postman public workspace, or git clone our repos on Symbl.ai’s GitHub.